AI stocks detached 150x from reality as bubble forms

Nvidia added the combined GDP of South Korea, Sweden, and Switzerland in market cap, yet its stock fell on perfect earnings.

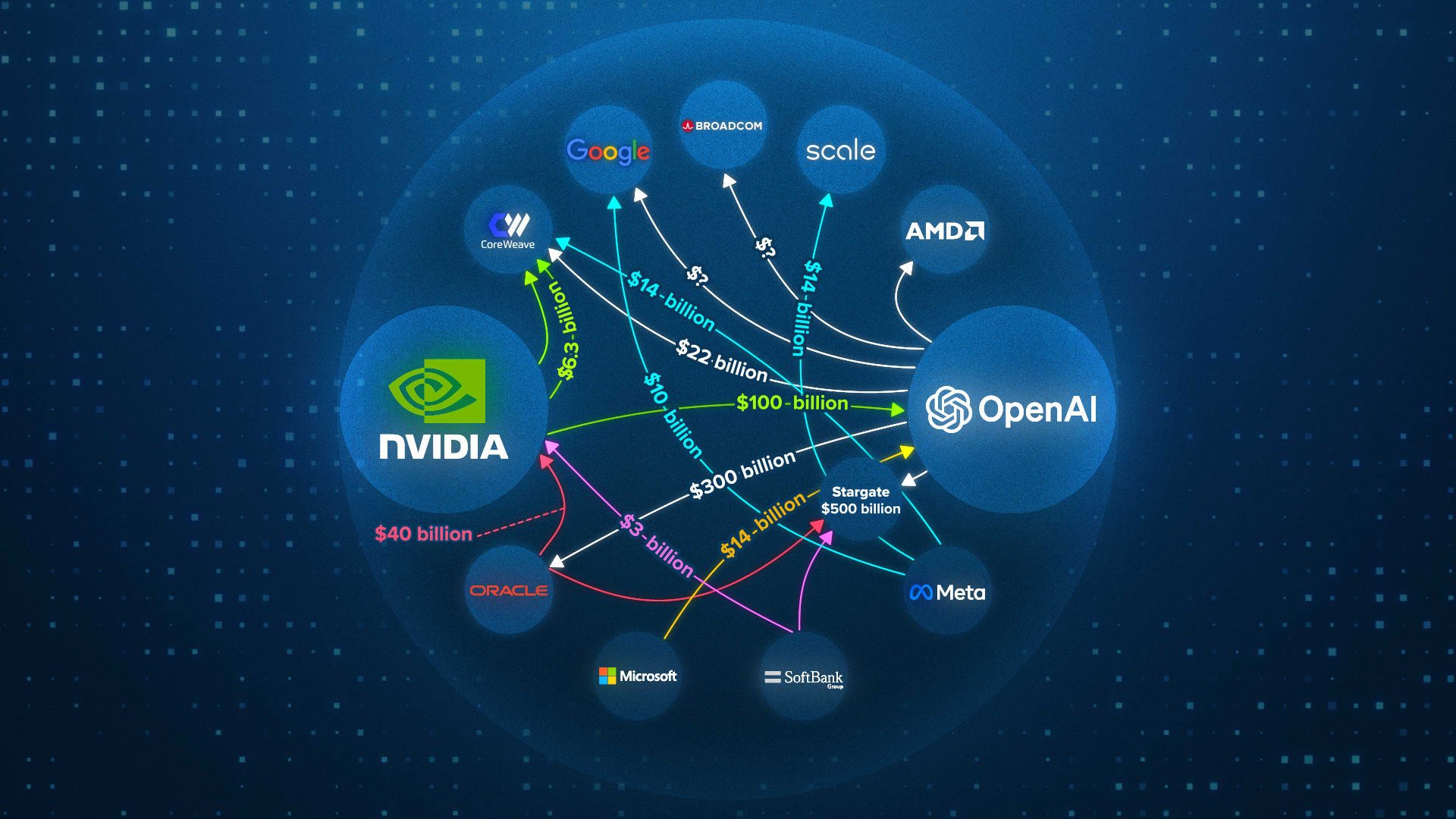

$3.3 trillion flooded into AI in 18 months. Nvidia added market cap exceeding the combined GDP of South Korea, Sweden, and Switzerland. 70% of AI startups have zero revenue, while productivity has barely moved, growing just 1.3%.

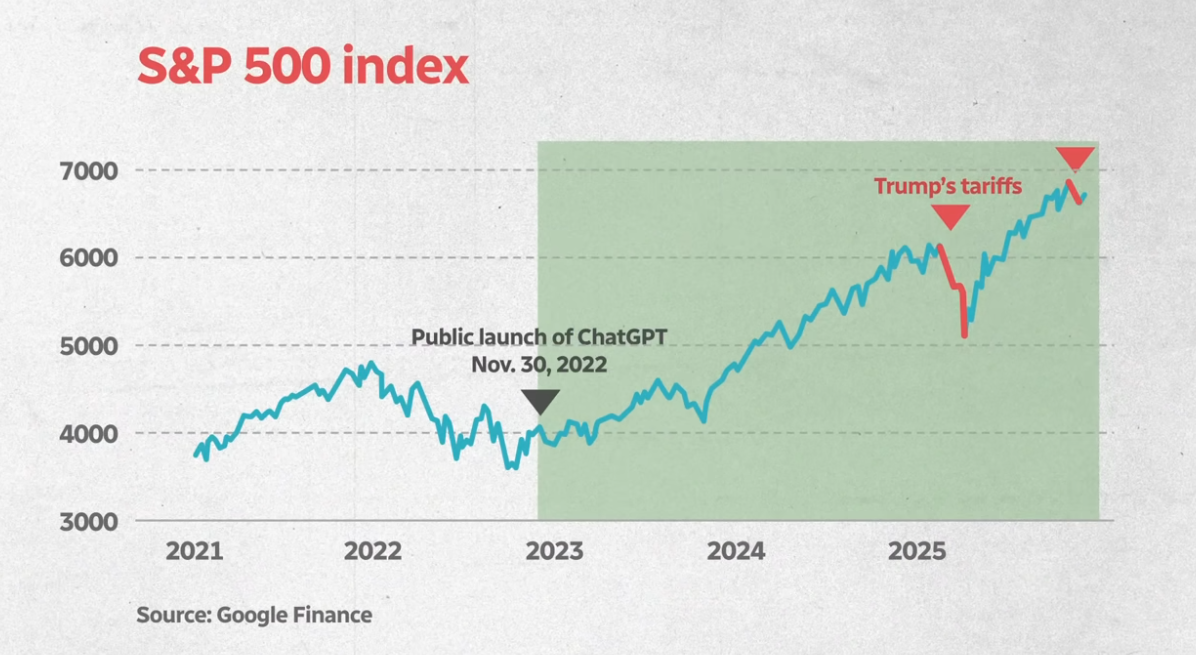

Reflexivity creates self-reinforcing AI bubble bigger than dotcom

George Soros's reflexivity theory explains AI's current insanity: markets aren't measuring reality, they're creating it through a feedback loop in which rising prices convince investors that rising prices are the new fundamentals. The same loop made Cisco the world's most valuable company in 2000 before it crashed 86%. Since 2023, Nvidia alone has added more market cap than the combined GDP of South Korea, Sweden, and Switzerland. Yet when it posted $57 billion in quarterly revenue, beating expectations by billions, the stock still fell and dragged the entire S&P 500 down, because the market had stopped responding to fundamentals and started responding to "narrative tension." As Tom Bilyeu explains:

"Someone posts an LLM breakthrough, a CEO hits a podcast saying the world is about to be rewritten, overnight belief translates into more money flooding a small number of stocks, driving valuations higher which acts as proof AI bullishness is justified."

The terrifying parallel: between 1998 and 2000, the NASDAQ jumped 278%, not on earnings but on the belief that rising prices were the new reality. 80% of IPOs had zero profits, and we know how that ended: Cisco dropped 86% when belief finally collapsed.
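To put the 278% run-up and the 86% crash on the same scale, here is a minimal sketch of the underlying arithmetic. The function names are illustrative and not from the source; the inputs are the figures quoted above.

```python
def required_gain_to_recover(drawdown: float) -> float:
    """Fractional gain needed to return to the prior peak after a fractional loss."""
    if not 0 <= drawdown < 1:
        raise ValueError("drawdown must be in [0, 1)")
    return 1 / (1 - drawdown) - 1


def drawdown_to_erase_gain(gain: float) -> float:
    """Fractional loss that takes a price back to where it stood before a gain."""
    if gain < 0:
        raise ValueError("gain must be non-negative")
    return 1 - 1 / (1 + gain)


# Cisco's 86% drop: the gain needed just to get back to the peak (about 614%).
print(f"Gain needed after an 86% drop: {required_gain_to_recover(0.86):.0%}")

# NASDAQ's 278% jump: the decline that would fully erase it (about 73.5%).
print(f"Drop that erases a 278% gain: {drawdown_to_erase_gain(2.78):.1%}")
```

The asymmetry is the point: losses and gains compound multiplicatively, so a stock that falls 86% has to rise more than sevenfold from its low to break even.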


AI startups burn cash faster than any technology in history

MIT's 2025 report reveals the shocking truth: 95% of Gen AI pilots fail to positively impact P&Ls because costs dwarf benefits. Meanwhile, 70% of AI startups earn zero revenue while trading at 30x multiples, versus 6x for traditional SaaS. xAI hits an insane 150x, a valuation equal to 150 years of today's revenue. Consider these devastating realities that Tom Bilyeu lays out:

OpenAI lost $5 billion in 2024 despite billions in revenue—they lose MORE money with more customers

AI companies lose "pennies to dollars on every request" due to astronomical energy demands, on top of $40,000 Nvidia chips bought by the tens of thousands, plus cooling and real estate costs

Most AI startups are "thin wrappers around the same four foundational models" with no moat
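One way to read the revenue multiples quoted above (6x, 30x, 150x) is as the number of years of revenue a valuation represents. The sketch below extends that reading to growing revenue. The growth rates are hypothetical inputs chosen for illustration, not figures from the source, and the function name is an assumption.

```python
import math


def years_of_revenue(multiple: float, annual_growth: float = 0.0) -> float:
    """Years until cumulative revenue equals the valuation.

    multiple: valuation divided by current annual revenue.
    annual_growth: assumed constant yearly revenue growth (0.5 means 50%).
    """
    if multiple <= 0 or annual_growth < 0:
        raise ValueError("multiple must be positive and growth non-negative")
    if annual_growth == 0:
        # Flat revenue: a 150x multiple is simply 150 years of revenue.
        return float(multiple)
    # Geometric series: R * ((1 + g)^n - 1) / g = multiple * R, solved for n.
    return math.log(1 + multiple * annual_growth) / math.log(1 + annual_growth)


# Multiples from the text; growth rates are hypothetical scenarios.
for multiple in (6, 30, 150):
    for growth in (0.0, 0.25, 0.50):
        years = years_of_revenue(multiple, growth)
        print(f"{multiple:>4}x at {growth:.0%} growth: {years:.1f} years")
```

This counts revenue, not profit, so it flatters every scenario: a company losing money on each request would need far longer to earn back its valuation.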

The productivity paradox is damning: while AI investment exploded 800%, US productivity grew just 1.3% in two years, proving the "economic transformation just hasn't happened yet" despite sky-high valuations acting as if it already has. Media mentions of AI in financial contexts surged from 500 in Q1 2022 to 30,000 by Q3 2023, a 60-fold rise (a 5,900% increase), yet the actual productivity gains remain invisible. Is this the most expensive productivity tool ever attempted, or the most spectacular misallocation of capital in history?
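The growth in media mentions is easy to check by hand, and the distinction between a fold change and a percentage increase matters here. A minimal sketch, using the 500 and 30,000 figures quoted above (the helper names are illustrative):

```python
def fold_change(old: float, new: float) -> float:
    """How many times larger the new value is than the old one."""
    if old <= 0:
        raise ValueError("old must be positive")
    return new / old


def percent_increase(old: float, new: float) -> float:
    """Increase relative to the old value, in percent."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (new - old) / old * 100


mentions_q1_2022 = 500
mentions_q3_2023 = 30_000

print(f"Fold change: {fold_change(mentions_q1_2022, mentions_q3_2023):.0f}x")
print(f"Percent increase: {percent_increase(mentions_q1_2022, mentions_q3_2023):,.0f}%")
```

A 60-fold rise corresponds to a 5,900% increase, because the percentage measures only the amount added on top of the starting value.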

Fiscal dominance traps Fed as $1.1 trillion margin debt fuels mania

The Fed can't raise rates without making government debt unpayable, creating what Bilyeu calls "fiscal dominance": a structural trap where cheap money floods the system with nowhere to go except chasing AI's narrative returns, pushing margin debt to $1.1 trillion for the first time in history. In 2023, borrowing at 5-6% to capture the S&P's 26.3% return seemed genius, but now asset prices face double inflation, from Fed printing AND from margin purchases creating artificial demand for stocks already priced decades into the future. The dotcom lesson is brutal: if you bought the NASDAQ at the March 2000 peak, you waited 15 years just to break even, while Amazon fell 95% before rising 100,000%, proving that survival, not prediction, creates wealth. Bilyeu's five pillars for navigating this are essential: be humble (the smartest people in 1999 were certain Yahoo and AOL would dominate forever), own infrastructure not narratives (Qualcomm survived and rose tenfold while Pets.com vanished in 268 days), bet on real revenue, never use leverage, and hold forever, because "the wealth wasn't made by predicting the bubble; it was made by surviving it." Will AI deliver transformation before the bubble bursts, or are we watching the greatest wealth transfer in history unfold?
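The case against leverage comes down to simple arithmetic. The sketch below is a one-period model under stated assumptions: the 2x leverage ratio and the 5.5% borrowing cost (the midpoint of the 5-6% range above) are illustrative choices, the 30% decline is a hypothetical scenario, and margin calls, which would force selling earlier, are ignored.

```python
def levered_return(asset_return: float, borrow_rate: float, leverage: float) -> float:
    """One-period return on the investor's own capital.

    leverage is total position size divided by own capital (1.0 = no borrowing).
    """
    if leverage < 1:
        raise ValueError("leverage must be at least 1.0")
    return leverage * asset_return - (leverage - 1) * borrow_rate


def wipeout_decline(leverage: float, borrow_rate: float = 0.0) -> float:
    """Asset decline over one period that takes the investor's capital to zero."""
    if leverage < 1:
        raise ValueError("leverage must be at least 1.0")
    return (1 - (leverage - 1) * borrow_rate) / leverage


BORROW_RATE = 0.055  # assumed midpoint of the 5-6% range
LEVERAGE = 2.0       # hypothetical: one borrowed dollar per dollar of own capital

# The 2023 scenario from the text: a 26.3% index return.
print(f"Up 26.3%, 2x levered: {levered_return(0.263, BORROW_RATE, LEVERAGE):.1%}")

# A hypothetical 30% decline with the same position.
print(f"Down 30%, 2x levered: {levered_return(-0.30, BORROW_RATE, LEVERAGE):.1%}")

# The decline at which the investor's own capital is gone.
print(f"Wipeout decline at 2x: {wipeout_decline(LEVERAGE, BORROW_RATE):.1%}")
```

Under these assumptions, leverage nearly doubles the gain in a good year, but a decline of under 50% ends the position entirely. An unlevered holder can sit through a drawdown and wait; a levered one cannot, which is why leverage is incompatible with a strategy built on survival.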